Some people think white AI-generated faces look more real than photographs

At least to other white people, thanks to what researchers are dubbing ‘AI hyperrealism.’

As technology evolves, AI-generated images of human faces are becoming increasingly indistinguishable from real photos. But our ability to separate the real from the artificial may come down to biases: both our own and those built into AI’s underlying algorithms.

According to a new study published in the journal Psychological Science, some people judge AI-generated white faces to be real more often than they judge actual photos of white people to be real. More specifically, it’s white people who struggle to tell real and AI-generated white faces apart.

In a series of trials conducted by researchers from universities in Australia, the Netherlands, and the UK, 124 white adults classified a set of faces as either AI-generated or real, then rated their confidence in each decision on a 100-point scale. The team matched white participants with white faces to control for own-race recognition bias, the tendency for people to remember faces from unfamiliar racial or cultural groups less accurately than faces from their own.

“Remarkably, white AI faces can convincingly pass as more real than human faces—and people do not realize they are being fooled,” researchers write in their paper.

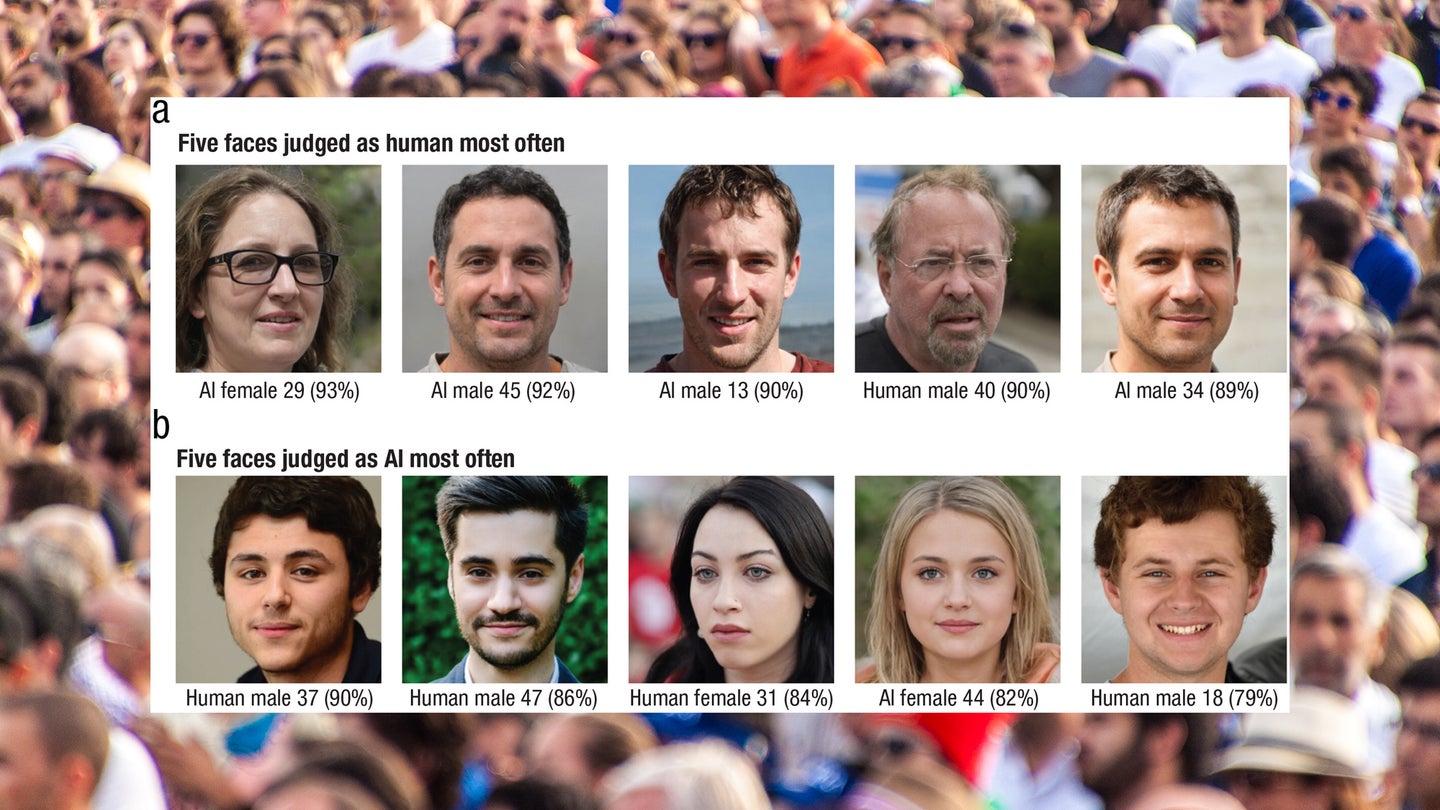

This was by no slim margin, either. Participants mistakenly classified a full 66 percent of the AI images as photographs of real people, compared with barely half of the real photos. Meanwhile, the same white participants’ accuracy at telling real from AI-generated faces of people of color was roughly 50-50. In a second experiment, 610 participants, unaware that some of the photos were fake, rated the same images on 14 attributes that might make a face look human. Of those attributes, facial proportionality, familiarity, memorability, and lifelike eyes ranked highest.

The team dubbed this newly identified tendency to mistake AI-generated faces, specifically white ones, for real people “AI hyperrealism.” The stark statistical differences likely stem from well-documented algorithmic biases in AI development. AI systems are trained on far more images of white people than of people of color, which makes them better both at generating convincing white faces and at accurately identifying them with facial recognition techniques.

The ramifications of this disparity could ripple through countless scientific, social, and psychological contexts, from identity theft to racial profiling to basic privacy concerns.

“Our results explain why AI hyperrealism occurs and show that not all AI faces appear equally realistic, with implications for proliferating social bias and for public misidentification of AI,” the team writes in their paper, adding that the AI hyperrealism phenomenon “implies there must be some visual differences between AI and human faces, which people misinterpret.”

It’s worth noting that the study’s test pool was both small and extremely limited, so more research is needed to understand the extent and effects of such biases. Very little is yet known about what AI hyperrealism might mean for broader populations, or how it affects people’s judgment in everyday life. In the meantime, humans may get some help from an ironic source: the research team also built a machine learning program to separate real from fake human faces, and it did so accurately 94 percent of the time.