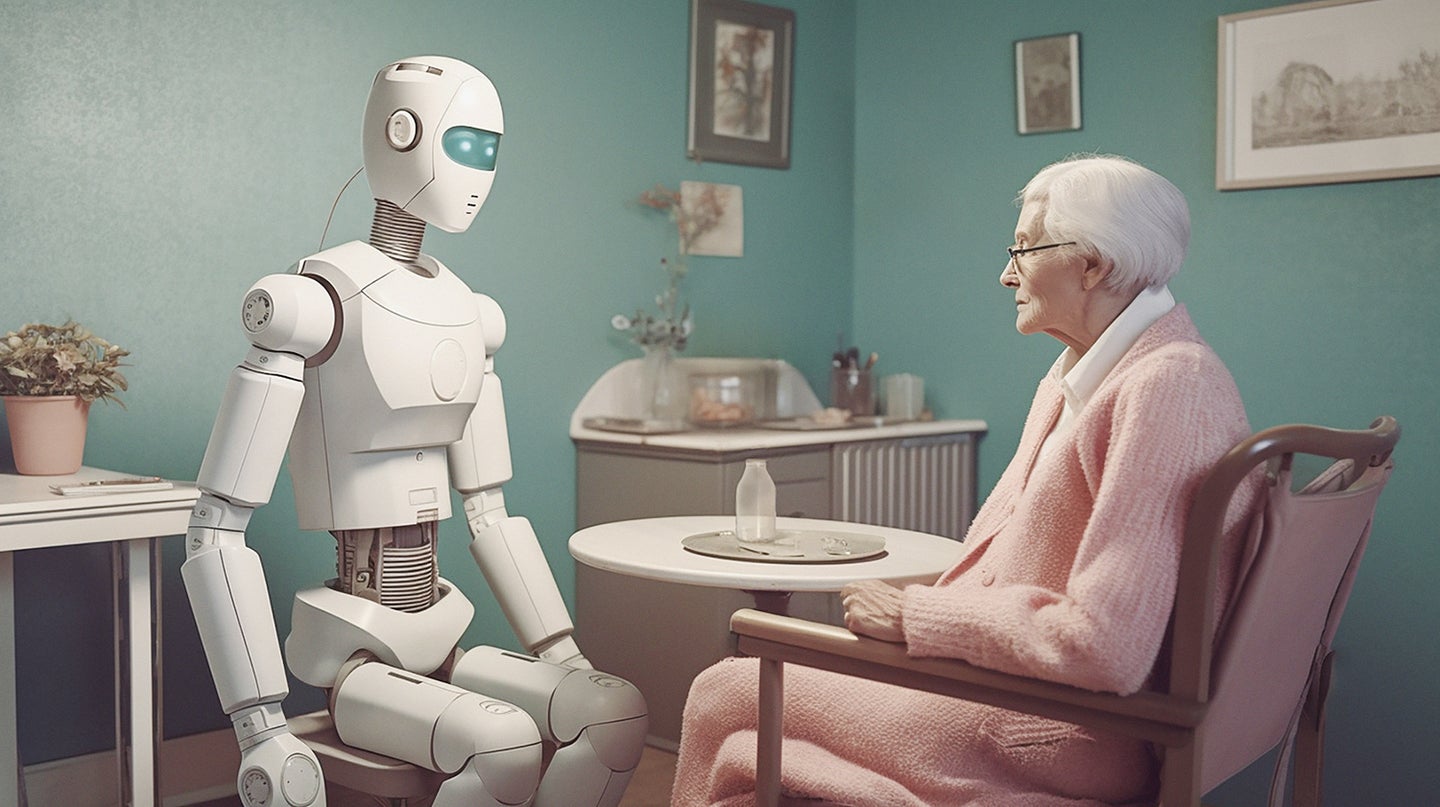

Will we ever be able to trust health advice from an AI?

Medical AI chatbots have the potential to counsel patients, but wrong replies and biased care remain major risks.

IF A PATIENT KNEW their doctor was going to give them bad information during an upcoming appointment, they’d cancel immediately. Generative artificial intelligence models such as ChatGPT, however, frequently “hallucinate”—tech industry lingo for making stuff up. So why would anyone want to use an AI for medical purposes?

Here’s the optimistic scenario: AI tools get trained on vetted medical literature, as some models in development already do, but they also scan patient records and smartwatch data. Then, like other generative AI, they produce text, photos, and even video—personalized to each user and accurate enough to be helpful. The dystopian version: Governments, insurance companies, and entrepreneurs push flawed AI to cut costs, leaving patients desperate for medical care from human clinicians.

Right now, it’s easy to imagine things going wrong, especially because AI has already been accused of spewing harmful advice online. In late spring, the National Eating Disorders Association temporarily disabled its chatbot after a user claimed it encouraged unhealthy diet habits. But people in the US can still download apps that use AI to evaluate symptoms. And some doctors are trying to use the technology, despite its underlying problems, to communicate more sympathetically with patients.

ChatGPT and other large language models are “very confident, they’re very articulate, and they’re very often wrong,” says Mark Dredze, a professor of computer science at Johns Hopkins University. In short, AI has a long way to go before people can trust its medical tips.

Still, Dredze is optimistic about the technology’s future. ChatGPT already gives advice that’s comparable to the recommendations physicians offer on Reddit forums, his newly published research has found. And future generative models might complement trips to the doctor, rather than replace consults completely, says Katie Link, a machine-learning engineer who specializes in healthcare for Hugging Face, an open-source AI platform. They could more thoroughly explain treatments and conditions after visits, for example, or help prevent misunderstandings due to language barriers.

In an even rosier outlook, Oishi Banerjee, an artificial intelligence and healthcare researcher at Harvard Medical School, envisions AI systems that would weave together multiple data sources. Using photos, patient records, information from wearable sensors, and more, they could “deliver good care anywhere to anyone,” she says. Weird rash on your arm? She imagines a dermatology app able to analyze a photo and comb through your recent diet, location data, and medical history to find the right treatment for you.

As medical AI develops, the industry must keep growing amounts of patient data secure. But regulators can lay the groundwork now for responsible progress, says Marzyeh Ghassemi, who leads a machine-learning lab at MIT. Many hospitals already sell anonymized patient data to tech companies such as Google; US agencies could require them to add that information to national data sets to improve medical AI models, Ghassemi suggests. Additionally, federal audits could review the accuracy of AI tools used by hospitals and medical groups and cut off valuable Medicare and Medicaid funding for substandard software. Doctors shouldn’t just be handed AI tools, either; they should receive extensive training on how to use them.

It’s easy to see how AI companies might tempt organizations and patients to sign up for services that can’t be trusted to produce accurate results. Lawmakers, healthcare providers, tech giants, and entrepreneurs need to move ahead with caution. Lives depend on it.
